How Executive Protection and Corporate Security Teams Can Best Utilize AI

By TorchStone Senior Analyst Ben West

Artificial Intelligence (AI) platforms are becoming increasingly accessible and better at reproducing human language patterns. Industries across the board are adopting AI tools to increase efficiency, and private security professionals can benefit, too, if they are careful about how they do it. This edition of The Watch provides guidance and examples on how security professionals can use AI language generation tools such as ChatGPT and Google Bard, and flags some practices that security professionals should avoid. As with any tool available to the security industry, AI platforms are only as good as the person using them. These tools are still far from replacing a well-trained, experienced security professional, but they can be helpful when used with the right expectations.

Present-day AI tools are good at taking over routine, time-consuming tasks so that professionals can focus on applying their more refined skills. Chances are, you or your company already uses AI tools in your day-to-day work. TorchStone uses a variety of threat detection and management platforms that rely on AI to identify emerging threats on social media channels, monitor live imagery and alert when firearms are visible, and identify connections between threatening individuals. A human operator could do these jobs, but it would require hours of sifting through information to find one or two relevant data points. Such work leads to fatigue and burnout, increasing the likelihood that the analyst will miss something as the job drags on.

AI tools help human operators focus on the information most relevant to their mission by filtering out the noise. To be clear, human judgment is still critical in determining which signals are threats and which are noise, and then how best to respond to each signal. At this point, AI is a valuable assistant that can help human operators work more efficiently, but it is no replacement for good training and judgment.

What AI Language Generation Tools Are Good For

- AI language generation tools like ChatGPT or Google Bard provide great starting points for a project. Users can enter broad, generic queries, and the responses can help kick-start the brainstorming and planning process for a new project.

- While general-purpose AI tools available to the public don’t know more about security than a security professional already does, they can offer introductions to the other sectors that security professionals work alongside. For example, AI tools can be a great starting point for quickly getting up to speed on security threats to sectors such as finance, retail, and entertainment.

- AI tools can act as writing assistants, helping draft report sections or summarize longer texts. Users can simply paste an article into the prompt along with instructions to summarize it in a paragraph of a specified word count. This can be very helpful when crafting reports.
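For teams that want to standardize this kind of summarization request, the minimal Python sketch below shows one possible approach, assuming the article is public material you are permitted to paste into a public tool. The helper names `build_summary_prompt` and `word_count_ok` are hypothetical illustrations, not part of ChatGPT, Bard, or any other platform. The code only assembles the prompt text and checks the length of whatever summary comes back, so submitting the prompt is still done by hand.

```python
# Hypothetical helpers for a manual copy-and-paste workflow. They do not call
# any AI platform; they only build the prompt text and check the reply length.


def build_summary_prompt(article_text: str, word_count: int) -> str:
    """Combine pasted article text with explicit summarization instructions."""
    if word_count <= 0:
        raise ValueError("word_count must be a positive integer")
    instructions = (
        f"Summarize the following article in one paragraph of about "
        f"{word_count} words. Use only information stated in the article."
    )
    # Strip stray whitespace picked up when copying from a web page.
    return f"{instructions}\n\nARTICLE:\n{article_text.strip()}"


def word_count_ok(summary: str, target: int, tolerance: float = 0.15) -> bool:
    """Return True if the summary's word count is within +/- tolerance of target."""
    actual = len(summary.split())
    return abs(actual - target) <= target * tolerance


prompt = build_summary_prompt("  Paste the full article text here.  ", 100)
print(prompt)
```

Here `word_count_ok(summary, 100)` returns `False` if the returned summary strays more than 15% from the 100-word target; the 15% tolerance is an arbitrary value chosen for illustration.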

What AI Is Not Good For

- First and foremost, security professionals need to be wary of including sensitive information in AI prompts. Most AI tools include clear warnings against entering Personally Identifiable Information (PII) or even specific names in queries. Publicly available AI tools are not secure databases. Queries and responses are generally accessible to the researchers and developers working to improve the platforms, so any sensitive information or PII entered into a prompt may be seen by unauthorized persons.

- AI tools are not fact-checkers or analysts, and users need to double-check any information an AI tool generates. Popular AI platforms are geared toward synthesizing information available online into natural-sounding text and speech. As the case study below demonstrates, platforms such as Google Bard have fabricated security incidents that sound plausible but have no factual basis. Other platforms, such as ChatGPT, are more upfront about their limitations in providing factual information.

Case Study: AI Language Generation

As mentioned above, AI language generators are helpful for learning about areas outside your expertise. One way security professionals can use AI to assist their work is in compiling travel security assessments. Such assessments typically require specific knowledge of a location or event that a security professional may be unfamiliar with. While AI language generation tools can’t write a quality report for you, they can help draft an outline and identify some issues to focus on.

In this example, imagine that we are writing a travel security assessment for a technology company CEO who will attend the Mobile World Congress in Barcelona, Spain. Following security best practices, we keep queries generic and do not include any specific information about the CEO, such as name, PII, or travel plans. This example uses responses from Google Bard and ChatGPT.
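One way a team might back up the practice of keeping queries generic is a simple screen that checks a draft prompt against a team-maintained list of terms that must never be entered into a public tool. The Python sketch below is a minimal illustration under that assumption: `find_sensitive_terms` and the entries in `BLOCKED_TERMS` are hypothetical placeholders, not real client data or part of any AI product. A keyword match will not catch paraphrases or indirect details, so a check like this supplements, rather than replaces, an analyst's review of each query.

```python
import re
from typing import Iterable, List

# Hypothetical "do not share" list. A real team would maintain its own list
# (principal's name, itinerary details, hotel, flight numbers, and so on).
BLOCKED_TERMS = ["Jane Doe", "Example Hotel", "Flight XX123"]


def find_sensitive_terms(prompt: str, blocked_terms: Iterable[str]) -> List[str]:
    """Return each blocked term that appears in the prompt.

    Matching is case-insensitive, tolerates extra whitespace between words,
    and only matches whole words (so "Jane Doe" does not match "Jane Doelittle").
    """
    hits = []
    for term in blocked_terms:
        words = term.split()
        if not words:
            continue  # skip blank entries so they cannot match everything
        # Escape each word and allow any run of whitespace between words.
        body = r"\s+".join(re.escape(word) for word in words)
        # Lookarounds stand in for \b so terms that start or end with
        # punctuation are still treated as whole words.
        pattern = r"(?<!\w)" + body + r"(?!\w)"
        if re.search(pattern, prompt, flags=re.IGNORECASE):
            hits.append(term)
    return hits


generic = "What are the most frequent security threats to foreign travelers in Barcelona, Spain?"
specific = "Our CEO Jane Doe lands on flight xx123 for Mobile World Congress."

print(find_sensitive_terms(generic, BLOCKED_TERMS))   # []
print(find_sensitive_terms(specific, BLOCKED_TERMS))  # ['Jane Doe', 'Flight XX123']
```

An empty result only means none of the listed terms appeared; it does not mean the prompt is safe to submit.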

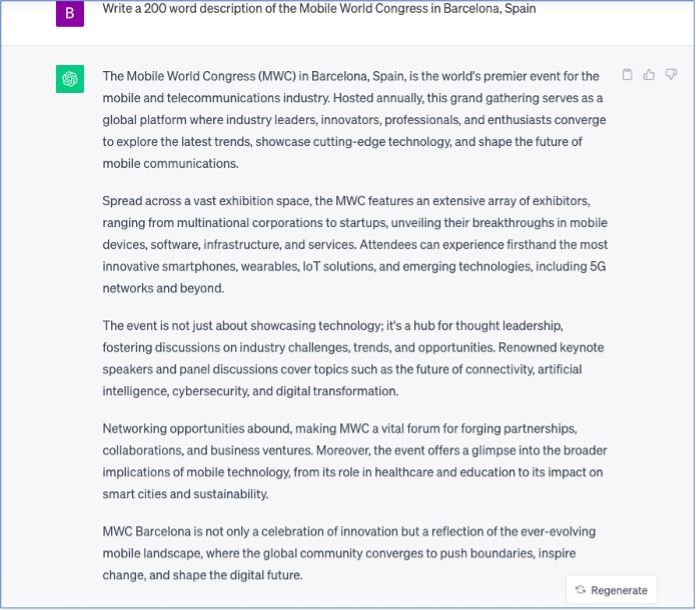

We begin with a general description of the Mobile World Congress in Barcelona, Spain. An AI language generator is great for setting the scene. The accompanying screenshot shows ChatGPT’s response to the query, “Write a 200-word description of the Mobile World Congress in Barcelona, Spain.”

With some light editing, this response could be easily inserted into a travel security assessment as an introduction to the event.

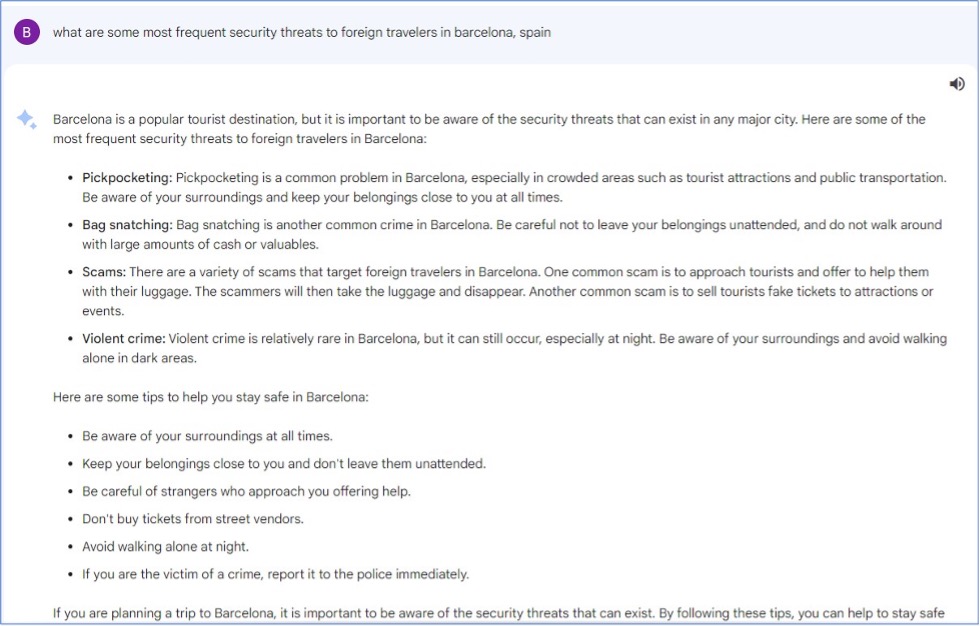

Moving on to the more security-oriented questions, we ask, “What are some most frequent security threats to foreign travelers in Barcelona, Spain?”

The results offer a good starting point, highlighting common threats such as opportunistic street crime, including pickpocketing, bag snatching, and scams. The response accurately notes that violent crime is “relatively rare.” By comparison, the ChatGPT response provided more detail on areas of higher criminal activity, naming La Rambla and the Gothic Quarter as areas of particular concern. However, the results from both platforms are generic. To build a more customized report, we drill down with more specific queries about threats to trade conferences.

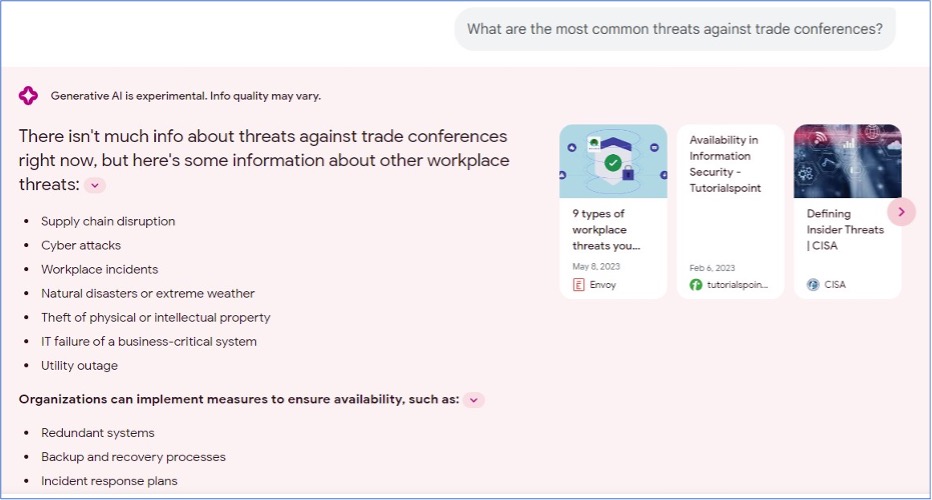

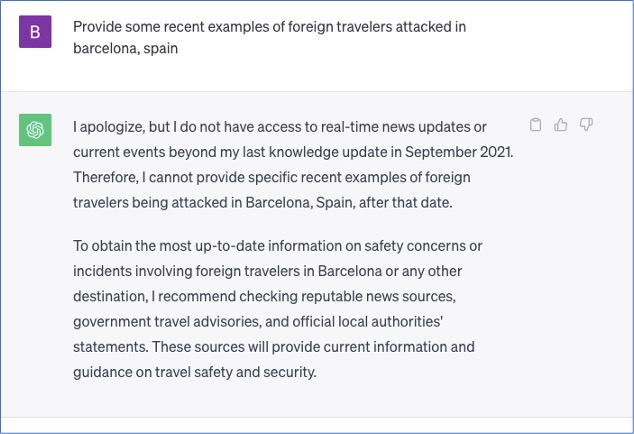

This response identifies more specific threats to consider when building out a report, but it lacks detail. Once we start asking for specifics, however, the platforms’ weaknesses really begin to show. For example, the accompanying screenshot shows the response to the query, “Provide some examples of foreign travelers attacked in Barcelona, Spain along with links to the original news reports.”

While the results sound credible, there are no reports of any such incidents occurring on or around the dates given in the response. The response cites reputable news outlets but does not provide links to any actual reports. It appears the platform generated answers that sound plausible but are not accurate. This is why it is critical to have a discerning human operator review and verify the results of AI-generated queries.

To its credit, ChatGPT is more transparent about its limitations in its response to a similar query.

Not a Stand-Alone Tool

As with any new tool, it is important that operators understand its limitations as well as its capabilities. AI language generators can save time by helping human operators plan and write easy-to-read reports, assist in the brainstorming stage of a project, or help draft training manuals for new hires. However, human operators need to carefully review AI-generated results and use their own research and best judgment to correct any inaccurate AI responses.